Working with NIfTI images#

NIfTI stands for Neuroimaging Informatics Technology Initiative, which is jointly sponsored by the US National Institute of Mental Health and the National Institute of Neurological Disorders and Stroke. NIfTI defines a file format for neuroimaging data that is meant to meet the needs of the fMRI research community. In particular, NIfTI was developed to support inter-operability of tools and software through a common file format. Prior to NIfTI there were a few major fMRI analysis software packages, and each used a different file format. NIfTI was designed to serve as a common file format for all of these (and future) neuroimaging software packages.

NIfTI was derived from an existing medical image format, called ANALYZE. ANALYZE was originally developed by the Mayo Clinic in the US, and was adopted by several neuroimaging analysis software packages in the 1990s. The ANALYZE header (where meta-data are stored) had extra fields that were not used, and NIfTI format basically expands on ANALYZE by using some of those empty fields to store information relevant to neuroimaging data. In particular the header stores information about the position and orientation of the images. This was a huge issue prior to NIfTI. In particular, there were different standards for how to store the order of the image data. For example, some software packages stored the data in an array that started from the most right, posterior, and inferior voxel, with the three spatial dimensions ordered right-to-left, posterior-to-anterior, and then inferior-to-superior. This is referred to as RPI orientation. Other packages that also used ANALYZE data stored the voxels in RAI format (with the second dimension going anterior-to-posterior) or LPI format (reversing left and right). This caused a lot of problems for researchers, especially if they wanted to try different analysis software, or use a pipeline that involved tools from different software packages. In some cases, this was just annoying (e.g., having to reverse the anterior-posterior dimension of an image). In other cases, it was confounding and potentially created erroneous results. This was especially true of the right-left (x) dimension. While it is immediately obvious when viewing an image which the front and back, and top and bottom, of the brain are, the left and right hemispheres are typically indistinguishable from eahc other, so a left-right swap could easily go undetected, potentially leading researchers to make completely incorrect conclusions about which side of the brain activation occurred on! The NIfTI format was designed to help prevent this by more explicitly storing orientation information in the header.

Another improvement with the NIfTI format was to allow a single file. ANALYZE format requires two files, a header (with a .hdr extension) and the image data itself (.img). These files had to have the same name prior to the extension (e.g., brain_image.hdr and brain_image.img), and doubled the number of files in a directory of images, which created more clutter. NIfTI defines a single image file ending in a .nii extension. As well, NIfTI images can be compressed using a standard, open-source algorithm known as Gzip, which can significantly reduce file sizes and thus the amount of storage required for imaging data. Since neuroimaging data files tend to be large, this compression was an important feature.

Although other file formats are still used by some software, NIfTI has become the most widely used standard for fMRI and other MRI research data file storage. Here we will learn how to convert a DICOM file to NIfTI format, which is typically the first step in an MRI research analysis pipeline, since most MRI scanners produce DICOM files, but the software researchers use to process their data reads NIFTI and not DICOM format.

Import packages#

Here we load in three new Python packages designed to work with NIfTI data:

dicom2nifticonverst DICOM images to NIfTI formatNiBabelreads and converts between NIfTI and several other common neuroimaging file formats, including ANALYZENiLearnis primarily designed to provide statistical analysis and machine learning tools for neuroimaging data. However, it also provides a number of utilities for reading and writing NIfTI images, and working with and visualizing data

As well we’ll load SciPy’s ndimage package, and Matplotlib

import dicom2nifti

import nibabel as nib

import nilearn as nil

import scipy.ndimage as ndi

import matplotlib.pyplot as plt

import os

We will use dicom2nifti’s convert_directory() function to convert the structural MRI images we worked with in the previous lesson from DICOM to NIfTI. We pass it the name of the folder in which the DICOM images are saved, and also instruct it to compress the resulting NIfTI file (to save space). We also use the reorient=True kwarg to force the image to be written in LAI orientation (i.e., starting with the most left, anterior, and inferior voxel), which ensures there is no ambiguity about the resulting NIfTI image.

convert_directory does not take an argument for the output file name. Instead, it uses the name of the scan that was used when it was acquired on the MRI scanner. This might seem like a frustrating lack of control, however it does ensure that there are no user errors in the conversion process, that could result in mis-identified files. Here we will first list the contents of the data folder, then run convert_directory, then list the contents again to see the new NIfTI file and what it is named:

os.listdir('data')

['.DS_Store',

'4_sag_3d_t1_spgr.nii.gz',

'anatomical_aug13_001.hdr',

'Anat001.20040930.145131.5.T1_GRE_3D_AXIAL.0099.dcm',

'anatomical_aug13_001.img',

'DICOM']

dicom2nifti.convert_directory('data/DICOM', 'data', compression=True, reorient=True)

os.listdir('data')

Unable to read: data/DICOM/IM-0004-0159.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0171.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0165.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0039.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0005.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0011.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0010.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0004.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0038.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0164.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0170.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0158.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0166.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0172.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0012.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0006.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0007.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0013.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0173.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0167.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0163.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0177.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0017.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0003.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0002.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0016.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0176.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0162.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0174.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0160.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0148.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0014.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0028.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0029.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0015.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0001.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0149.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0161.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0175.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0112.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0106.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0066.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0072.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0099.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0098.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0073.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0067.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0107.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0113.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0139.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0105.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0111.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0059.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0071.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0065.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0064.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0070.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0058.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0110.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0104.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0138.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0100.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0114.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0128.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0074.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0060.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0048.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0049.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0061.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0075.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0129.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0115.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0101.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0117.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0103.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0063.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0077.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0088.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0089.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file

if _is_compressed(dicom_file, force):

~~~~~~~~~~~~~~^^^^^^^^^^^^^^^^^^^

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 110, in _is_compressed

header = pydicom.read_file(dicom_file,

^^^^^^^^^^^^^^^^^

AttributeError: module 'pydicom' has no attribute 'read_file'

Unable to read: data/DICOM/IM-0004-0076.dcm

Traceback (most recent call last):

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/convert_dir.py", line 46, in convert_directory

dicom_headers = compressed_dicom.read_file(file_path,

defer_size="1 KB",

stop_before_pixels=False,

force=dicom2nifti.settings.pydicom_read_force)

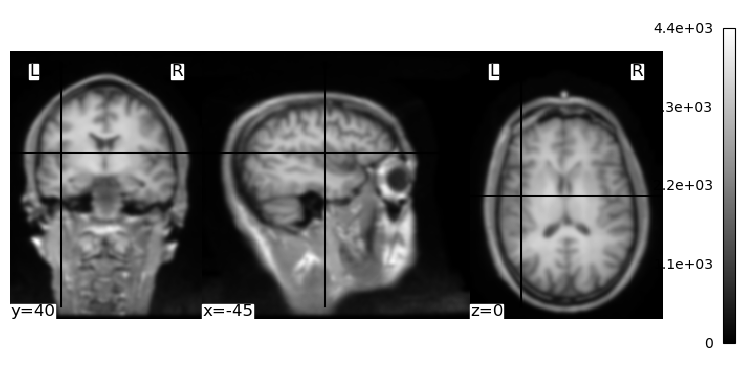

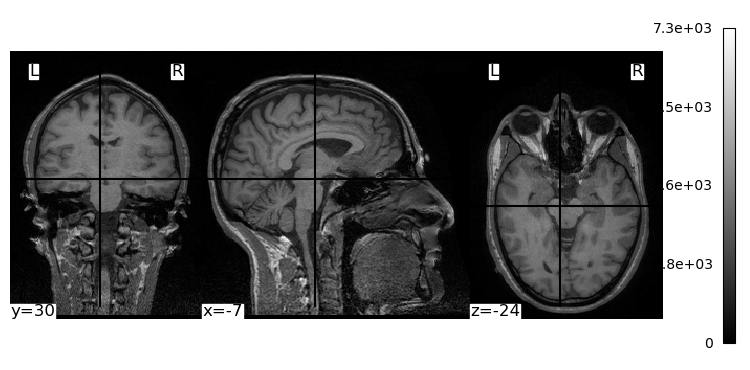

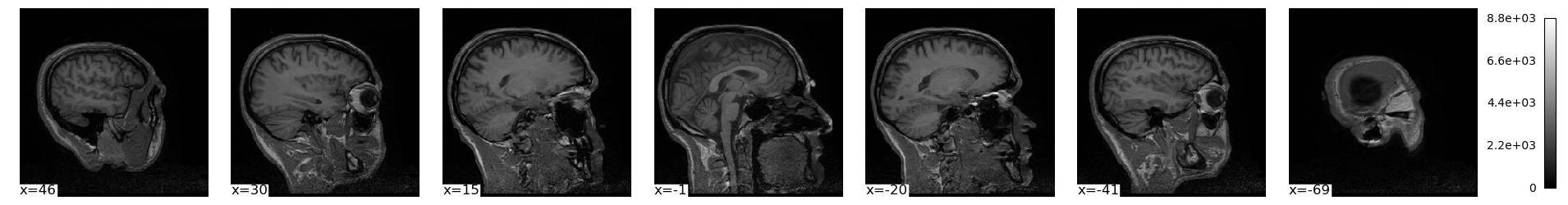

File "/Users/aaron/miniforge3/envs/neural_data_science/lib/python3.13/site-packages/dicom2nifti/compressed_dicom.py", line 15, in read_file